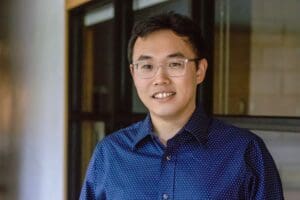

I am an Assistant Professor in the Department of Computer and Information Science at the University of Michigan-Dearborn, where I lead the Trustworthy AIoT Lab (TAI Lab). Before joining UM-Dearborn, I was a Postdoctoral Associate at Duke University. I received my Ph.D. in Computer Science and Engineering from Michigan State University, my M.S. from Northwestern Polytechnical University, and my B.E. with honors from Taiyuan University of Technology. I completed my Ph.D. within five years, and I have pursued the same research direction since I was an undergraduate majoring in IoT engineering.

My current research interests are mobile computing, AIoT, Cyber-Physical Systems, AI-assisted sensing/localization, and HCI. My previous work focused on exploring spatial-temporal diversities in Optical Wireless Communication (OWC) to improve communication performance and to enable sensing/localization. My vision is that light can provide secure, location-aware communication for the Internet of Things and human-centered mobile computing. By exploiting OWC's spatial-temporal diversities together with machine learning, we can use light as a promising medium for next-generation wireless networks with broad applications (e.g., LiFi, V2X networks, underwater navigation, digital health, smart cities, HCI, and AR/VR).

Some of my projects are detailed below.

(1) HoloCube is an innovative optical wireless communication (OWC) system designed to enable 3D Internet of Things (IoT) connectivity using software-defined optical camera communication and the Pepper’s Ghost effect. Unlike conventional OWC systems that treat the transmitter as a point source, HoloCube employs virtual 3D hollow cubes with adaptive Spatial-Color Shift Keying (SCSK) modulation. These cubes maintain a consistent structure when viewed from different angles while dynamically embedding distinct data over time. The cube’s positioning elements provide dual references for 3D reconstruction (spatial) and robust color decoding (spectral). Experimental results demonstrate that HoloCube achieves reliable omnidirectional IoT connections with a goodput of 70 Kbps over a 4-meter range in real-world indoor settings, offering an efficient and secure solution for mobile IoT applications.
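To make the color-decoding idea more concrete, here is a minimal sketch of generic color shift keying in Python. It is not HoloCube's SCSK scheme: the 4-color palette, the 2-bit symbol mapping, the per-channel gain model, and the function names (`encode`, `channel_gains`, `decode`) are all illustrative assumptions, and the spatial dimension is ignored entirely. It only shows how a reference patch of known color (loosely playing the role of the cube's positioning elements) can let a receiver correct channel color distortion before nearest-color decoding.

```python
from typing import Dict, List, Sequence, Tuple

RGB = Tuple[float, float, float]

# Hypothetical 4-symbol palette (not HoloCube's): each color carries 2 bits.
PALETTE: Dict[str, RGB] = {
    "00": (255.0, 0.0, 0.0),      # red
    "01": (0.0, 255.0, 0.0),      # green
    "10": (0.0, 0.0, 255.0),      # blue
    "11": (255.0, 255.0, 255.0),  # white (also used as the reference patch)
}


def encode(bits: str) -> List[RGB]:
    """Split a bit string into 2-bit symbols and map each symbol to a palette color."""
    if len(bits) % 2:
        raise ValueError("bit string length must be even")
    return [PALETTE[bits[i:i + 2]] for i in range(0, len(bits), 2)]


def channel_gains(seen: RGB, expected: RGB) -> RGB:
    """Per-channel gains that map the observed reference color back to its known value."""
    return tuple(e / s if s > 0 else 1.0 for s, e in zip(seen, expected))


def decode(received: Sequence[RGB], gains: RGB) -> str:
    """Undo the estimated channel gains, then pick the nearest palette color per symbol."""
    symbols = []
    for pixel in received:
        corrected = [p * g for p, g in zip(pixel, gains)]
        nearest = min(
            PALETTE,
            key=lambda sym: sum((c - q) ** 2 for c, q in zip(corrected, PALETTE[sym])),
        )
        symbols.append(nearest)
    return "".join(symbols)


# Usage: simulate a (hypothetical) channel that attenuates R, G, B differently.
attenuation = (0.8, 0.6, 0.9)
bits = "00011011"
received = [tuple(c * a for c, a in zip(color, attenuation)) for color in encode(bits)]
reference_seen = tuple(255.0 * a for a in attenuation)  # white reference patch as observed
gains = channel_gains(reference_seen, PALETTE["11"])
print(decode(received, gains))  # 00011011
```

Calibrating against a known reference before classification is the design choice being illustrated; a real system would also face noise, ambient light, and camera color response, none of which this sketch models.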

(2) RoFin is an innovative system designed for real-time 3D hand pose reconstruction, leveraging six temporal-spatial 2D rolling fingertips to precisely track 20 hand joints. Unlike traditional vision-based approaches, which are limited by low sampling rates (60 Hz), poor performance in low-light conditions, and restricted detection ranges, RoFin uses active optical labeling for finger identification and high rolling shutter rates (5-8 kHz) to enhance 3D location tracking. Implemented with wearable gloves featuring low-power LED nodes and commercial cameras, RoFin demonstrates significant advancements in human-computer interaction (HCI), including applications such as multi-user interfaces and virtual writing for Parkinson's patients. Experiments show that RoFin achieves 85% labeling accuracy at distances up to 2.5 meters, improves tracking granularity with 4× more sampled points per frame, and reconstructs hand poses with a mean deviation error of just 16 mm relative to Leap Motion.
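The rolling-shutter idea behind optical labeling can be illustrated with a small Python sketch. A rolling-shutter camera exposes image rows one after another, so an LED blinking as a square wave at frequency f shows up as bright and dark stripes whose period is (row rate)/f rows; measuring that period identifies the LED. The row rate, the six blink frequencies, the node names, and the helper functions below are hypothetical, and this generic frequency-based labeling is not necessarily the encoding RoFin uses.

```python
from typing import Dict, List

ROW_RATE_HZ = 30_000  # hypothetical: rows read out per second by the rolling shutter

# Hypothetical ID table: each LED node blinks at a distinct square-wave frequency.
LED_FREQS_HZ: Dict[str, int] = {
    "node_a": 1_000,
    "node_b": 1_500,
    "node_c": 2_000,
    "node_d": 2_500,
    "node_e": 3_000,
    "node_f": 3_750,
}


def simulate_column(freq_hz: int, n_rows: int = 600, row_rate: int = ROW_RATE_HZ) -> List[int]:
    """Row i is exposed at t = i / row_rate; the LED is on during the first half of each period."""
    # Integer test equivalent to frac(i * f / row_rate) < 0.5, avoiding float rounding.
    return [1 if (i * freq_hz) % row_rate < row_rate // 2 else 0 for i in range(n_rows)]


def estimate_frequency(column: List[int], row_rate: int = ROW_RATE_HZ) -> float:
    """Average the spacing between dark-to-bright transitions to get the stripe period."""
    rises = [i for i in range(1, len(column)) if column[i - 1] == 0 and column[i] == 1]
    if len(rises) < 2:
        raise ValueError("need at least two stripe periods in view")
    period_rows = (rises[-1] - rises[0]) / (len(rises) - 1)
    return row_rate / period_rows


def identify(column: List[int]) -> str:
    """Label the LED whose nominal frequency is closest to the estimated one."""
    f_est = estimate_frequency(column)
    return min(LED_FREQS_HZ, key=lambda name: abs(LED_FREQS_HZ[name] - f_est))


# Usage: every simulated node should be labeled correctly.
for name, freq in LED_FREQS_HZ.items():
    print(name, identify(simulate_column(freq)))
```

Because the rows are sampled far faster than the frame rate, a single frame contains many time samples of each LED, which is what makes per-frame identification possible in this simplified model.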

We have applied various ML models in our projects, for example CycleGAN for underwater image denoising, as well as models for omnidirectional localization and user identification. The most significant scientific contribution I would like to make is to explore light as a medium for ubiquitous wireless communication and sensing by leveraging AI and IoT technologies.